Terraform test: How, and what else I wish it did

Context

As humans, we want to be able to confirm that whatever we create works well in all situations, be it a piece of code or something else. Infrastructure provisioning has changed from months of requests and forms being validated and approved to an API call that creates or destroys resources.

HashiCorp Terraform has become the de facto tool for modeling and provisioning infrastructure, or interacting with platforms, through a common configuration language. The appeal of having a common structure and a three-step process (init, plan, and apply), irrespective of the platform you are interacting with, as long as it has a compliant provider, is huge. That configuration language is called HashiCorp Configuration Language (HCL), and its structure looks like this:

```hcl
# Arguments
image_id = "abc123"

# Blocks
resource "aws_instance" "example" {
  ami = "abc123"

  network_interface {
    # ...
  }
}
```

Reference: https://developer.hashicorp.com/terraform/language/syntax/configuration

Problem Statement

For the past few years, whenever I looked at testing these configurations, the options I had looked very different from the configuration language I was writing in.

- Terratest (a Go library that provides patterns and helper functions to test your infrastructure).

- Deploy the infrastructure manually and validate (exploratory testing, in a way).

- Put all the other linters and infrastructure misconfiguration scanning tools here.

The second one was what I resorted to most days, since I was not comfortable with Go even though the test structure was fairly easy to use. The mention of a terraform test command made me smile at the possibility of having a native framework for this. Talking to folks who were using Terraform, I found I wasn’t alone in this dilemma.

Test Structure

The command I have been using a lot more than I expected is terraform test. Its help output lists the available options, including a -cloud-run flag specific to executing tests on Terraform Cloud (TFC) within the registry.

Let's dig deeper…

At its core, each Terraform test lives in a test file. Terraform discovers test files based on their file extension: .tftest.hcl or .tftest.json. By default, the command looks for test files in the root configuration directory and in the tests directory, unless you point it at a different directory with the -test-directory flag. A sample directory structure could look like the one below.

```
├── main.tf
├── outputs.tf
├── tests
│   └── main.tftest.hcl
├── variables.tf
└── versions.tf
```

Each test file contains the following root level attributes and blocks:

- One to many run blocks.

- Zero to one variables block.

- Zero to many provider blocks.

The primary block you work with in this setup is the run block. There are a few defaults you should know as you work with it. Some of the inputs you can provide are:

providers

As with any Terraform command, the provider configuration needs to be available, and tests are no different. You can add the provider configuration at the root of the test file, outside the run blocks, and each run defaults to the provider with the matching name. Be it aws or artifactory, a matching provider configuration in the test file is used while running the tests.
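To make the provider matching concrete, here is a minimal sketch of a test file with a default and an aliased aws provider. The regions, the secondary alias, the run names, and the bucket_name input are assumptions for illustration and are not taken from the reference repo.

```hcl
# main.tftest.hcl (illustrative sketch)

# Default provider: run blocks that do not set `providers` use this one.
provider "aws" {
  region = "us-east-1" # assumed region
}

# Aliased provider: only used when a run block asks for it.
provider "aws" {
  alias  = "secondary"
  region = "us-west-2" # assumed region
}

# Assumes the module under test declares a `bucket_name` input.
variables {
  bucket_name = "testbucket"
}

run "uses_default_provider" {
  command = plan
}

run "uses_secondary_provider" {
  command = plan

  # Map the module's `aws` provider to the aliased configuration.
  providers = {
    aws = aws.secondary
  }
}
```

The providers attribute is only needed when a run should deviate from the default; leaving it out keeps the common case short.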

As of Terraform 1.7.0, you have the ability to mock providers. This makes it even easier to test your features without deploying resources, even for assertions that could otherwise only be made after an apply. I will try to cover mock providers and overrides in another post.
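For a quick taste ahead of that post, a mock provider can be declared at the root of the test file with a mock_provider block. The sketch below assumes Terraform 1.7.0 or later and a module that declares a bucket_name variable and an aws_s3_bucket.this resource; the mock_aws alias and the run name are illustrative.

```hcl
# Replaces the real aws provider with a mock: no credentials are needed
# and nothing is created in an AWS account.
mock_provider "aws" {
  alias = "mock_aws"
}

run "apply_against_mock" {
  # apply is the default command; stated explicitly here for clarity.
  command = apply

  providers = {
    aws = aws.mock_aws
  }

  variables {
    bucket_name = "testbucket"
  }

  assert {
    # `bucket` comes from configuration, so it is not a generated mock value.
    condition     = aws_s3_bucket.this.bucket == "testbucket"
    error_message = "Bucket name doesn't match the expected value"
  }
}
```

Computed attributes such as id are filled in with generated values by the mock, so asserting on them against a fixed string would likely fail unless the value is overridden.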

variables

Variables can be injected in multiple ways:

- The default set in the original variable definition.

- A variables block within the run block. Consider these like locally scoped variables in a function.

- A variables block at the root of the test file (the file ending in tftest.hcl). These are global variables defined for all tests in the test file.

- Variables declared as key-value entries in a .tfvars file, which can be used as with other terraform commands. These are global variables defined when the terraform test command is invoked with the -var-file argument.
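The two test-file scopes can be sketched as follows. This assumes the module under test declares a bucket_name variable and that a provider configuration (real or mocked) is available; the run names and values are made up for illustration.

```hcl
# File-level variables: shared by every run block in this test file.
variables {
  bucket_name = "testbucket"
}

run "uses_file_level_value" {
  command = plan

  assert {
    condition     = var.bucket_name == "testbucket"
    error_message = "Expected the file-level value of bucket_name"
  }
}

run "overrides_for_this_run_only" {
  command = plan

  # Run-level variables: take precedence over the file-level block,
  # but only inside this run.
  variables {
    bucket_name = "another-testbucket"
  }

  assert {
    condition     = var.bucket_name == "another-testbucket"
    error_message = "Expected the run-level override of bucket_name"
  }
}
```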

command

command defines the scope of what is available to Terraform during the test. It defaults to apply, which means you are provisioning real infrastructure and need credentials for the platform you are interacting with, unless you are using a mocked provider (one shows up in an example later in this post).
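As a rough sketch of the difference, the two runs below exercise the same module with plan and with the default apply. It assumes a module with a bucket_name variable and an aws_s3_bucket.this resource, plus working AWS credentials; the run names are illustrative.

```hcl
variables {
  bucket_name = "testbucket"
}

run "plan_only" {
  # Nothing is created; only values known at plan time can be asserted.
  command = plan

  assert {
    condition     = aws_s3_bucket.this.bucket == "testbucket"
    error_message = "Planned bucket name doesn't match the expected value"
  }
}

run "create_bucket" {
  # `command` is omitted, so this run defaults to apply and creates the bucket.
  assert {
    # `id` is only known once the bucket exists.
    condition     = aws_s3_bucket.this.id == "testbucket"
    error_message = "Bucket name doesn't match the expected value"
  }
}
```

Terraform attempts to destroy the resources created by apply runs once the test file finishes, so a failed cleanup is worth watching for in the output.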

assert

This is what you came for. This block defines the conditional expression you are validating, along with an error message shown to the user when it fails. HashiCorp has gradually gotten users accustomed to this assertion structure through checks and pre-/post-conditions, which were introduced to the community in earlier Terraform versions.

```hcl
assert {
  condition     = ...
  error_message = ...
}
```

An example would be:

```hcl
assert {
  condition     = aws_s3_bucket.this.id == "testbucket"
  error_message = "Bucket name doesn't match the expected value"
}
```

expect_failures

So what about exceptions, you ask? To anyone familiar with the xUnit frameworks, which let you test your exception logic, the expect_failures attribute will feel natural. It is primarily useful when you want to test the validations you have placed on a variable to enforce its constraints.

For example, S3 bucket names must not contain two adjacent periods. Below, a variable validation enforces that constraint, and a test passes an invalid value in its variables block and expects the validation to fail.

```hcl
# variables.tf
variable "bucket_name" {
  type = string
  validation {
    condition     = !strcontains(var.bucket_name, "..")
    error_message = "Bucket names must not contain two adjacent periods."
  }
}

# *.tftest.hcl

run "variable_validations_periods" {
  command = plan
  providers = {
    aws = aws.mock_aws
  }
  variables {
    bucket_name = "test..bucket"
  }
  expect_failures = [var.bucket_name]
}
```

These are not the only options or attributes available within a run block, but you will encounter them more often than the others. I plan to add posts covering the situations where you would use the other attributes. You can also add an optional module block if you need one to be present as setup for your tests.

Thoughts around testing

As with any test framework, you should consider the three steps:

- Arrange

- Act

- Assert

Or the Gherkin/BDD format: Given ... When ... Then ...

Arrange

This is primarily the setup stage before tests are run. It could include the provider blocks you want your test files to use, modules you want to run or set up beforehand, variables that should be available, and mocks you want your run blocks to interact with.

```hcl
provider "aws" {

}

run "setup" {
  module {
    source = "..."
  }
}

variables {
  bucket_name = "testbucket"
}
```
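One way a setup run can feed later runs is through its outputs. The sketch below is an assumption-heavy illustration: ./tests/setup is a hypothetical helper module that exposes a bucket_name output, and the module under test is assumed to declare a bucket_name variable and an aws_s3_bucket.this resource.

```hcl
# Arrange: run a helper module first. The path is hypothetical.
run "setup" {
  module {
    source = "./tests/setup"
  }
}

run "create_bucket" {
  command = plan

  variables {
    # Reuse a value produced by the earlier run block.
    bucket_name = run.setup.bucket_name
  }

  assert {
    condition     = aws_s3_bucket.this.bucket == run.setup.bucket_name
    error_message = "Bucket name doesn't match the value generated by setup"
  }
}
```

Because run blocks execute in order, the setup run has completed, with the default apply, by the time create_bucket reads its output.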

Act

I would associate this with the command attribute in the run block, which is either plan or apply. The plan_options block is also available, with optional attributes such as refresh and replace.
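A small sketch of plan_options inside a run block, assuming the same bucket_name variable and aws_s3_bucket.this resource as in the other examples; the run name is illustrative.

```hcl
run "plan_without_refresh" {
  command = plan

  # Counterpart of passing -refresh=false to terraform plan.
  # mode, replace, and target are the other optional attributes.
  plan_options {
    refresh = false
  }

  variables {
    bucket_name = "testbucket"
  }

  assert {
    condition     = aws_s3_bucket.this.bucket == "testbucket"
    error_message = "Planned bucket name doesn't match the expected value"
  }
}
```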

Assert

What you want to validate goes here. The assert block and the expect_failures attribute map to this stage.

The repo below shows some test examples for a configuration which takes one input and creates an S3 bucket using the aws_s3_bucket resource.
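Putting the three stages together, a test file could be annotated roughly like this. It is a sketch that assumes the bucket_name variable with the adjacent-periods validation shown earlier and an aws_s3_bucket.this resource; the region and run names are made up.

```hcl
# Arrange: provider configuration and shared inputs.
provider "aws" {
  region = "us-east-1" # assumed region
}

variables {
  bucket_name = "testbucket"
}

run "bucket_name_is_passed_through" {
  # Act
  command = plan

  # Assert
  assert {
    condition     = aws_s3_bucket.this.bucket == var.bucket_name
    error_message = "Bucket name doesn't match the input variable"
  }
}

run "rejects_adjacent_periods" {
  # Arrange (scoped to this run)
  variables {
    bucket_name = "test..bucket"
  }

  # Act
  command = plan

  # Assert: the variable validation is expected to fail.
  expect_failures = [var.bucket_name]
}
```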

- Reference repo: https://github.com/quixoticmonk/blog-code-references/tree/main/04-11-2024-terraform-test

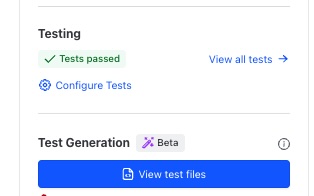

Tests are a wonderful addition to Terraform that make it easier for module developers to bake quality in. They also connect well with the module registry, where you can see the tests executed as part of a remote run as you publish a module.

Wishlist

Test suites

It would be great to have a mechanism for a suite of tests that executes multiple run blocks under a single plan/apply action. Yes, something similar is possible today with multiple assert blocks under a single run block. But the output of terraform test then reports a single run rather than showing how many assertions passed. Should I care about the numbers or the representation as long as the tests work? Maybe not.

A single run block currently does help avoid repeating the command = plan/apply line.

```hcl
run "positive" {

  command = plan
  assert {...}
  assert {...}
  assert {...}
  assert {...}
  assert {...}
}

run "failures" {

  command = plan
  assert {...}
  assert {...}
  assert {...}
}
```

A few ways of representing this could be:

- run_suite with multiple run blocks

```hcl
run_suite "suite_1" {
  command = plan
  run {....}
  run {....}
  run {....}
}
```

- Continue with a single run block and multiple assert blocks, but have a mechanism to provide a name or identifier for each assertion so that the test output showcases it.

```hcl
run "suite_1" {
  assert {
    name = "test1"
    .....
  }
  assert {
    name = "test2"
    .....
  }
}
```

Associated GitHub issue: terraform/34759

Test reports

The current test output prints results to standard output, like your plan and apply stages. You do have the option of adding a -json flag to get a JSON representation of the results, which is noisy compared to the succinct results of the default output.

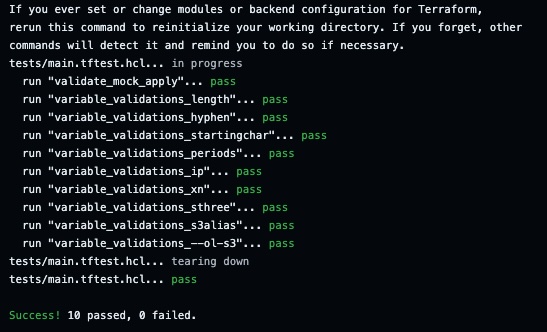

Successful runs:

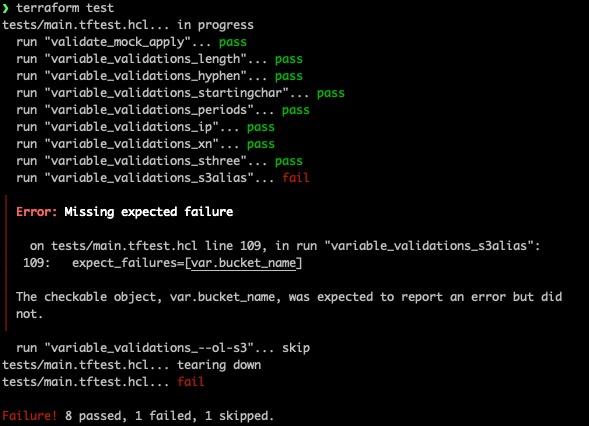

Failures:

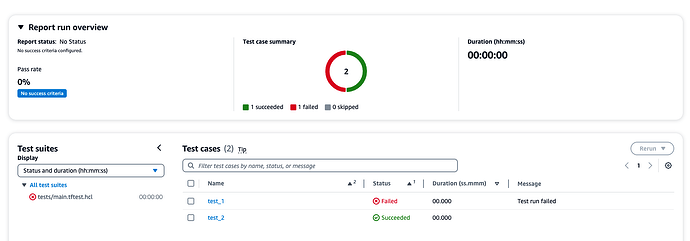

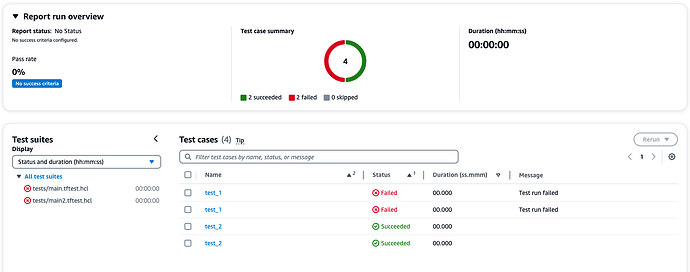

I would still love to see a JUnit-compatible export option like the one in the [1.8.0-alpha](https://github.com/hashicorp/terraform/releases/tag/v1.8.0-alpha20240228). Some early experiments around this produced reports in CodeCatalyst, which I was using at that point in time.

Conclusion

In all fairness, I haven’t even covered the module-level intricacies and mocking elements of the test framework, which could do with a post of their own. I am personally delighted that the framework is available. The mocking aspects come in handy for infrastructure components which take a few minutes to provision (EKS, for example). If you haven’t used it yet, I strongly urge you to upgrade to version 1.6.0 or later and play around with it.